Automating a Red Team Lab (Part 2): Monitoring and Logging

Let's take the infrastructure we made in part 1 and add some logging and monitoring so we can see what's happening behind the scenes.

In our previous post we used Packer, Ansible and Terraform to automate the creation of a domain on our ESXi server. This is great for testing out tools and techniques, but as red teamers we really need to see what's going on behind the scenes in the event logs so that when the blue team kick in our front door, we know exactly where we went wrong.

In this post we'll cover adding some monitoring services so that we can trace events. To achieve this, we're going to add an Ubuntu server to our lab, install the ELK stack on it, and have Winlogbeat on each Windows host ship its event logs across the network. As before, we're going to do all this automagically using Infrastructure-as-Code, because as the old saying goes:

"Teach a man to fish, and he'll eat for a day. Try and use infrastructure as code for everything and your blood pressure will be so high you won't care about fishing anyway".

As before, I've uploaded all the files here so you can play along at home.

Creating A Monitoring VM

First up, let's add an Ubuntu server so we have somewhere to feed all the logs back to. I'm using Ubuntu because I already had a Packer config for it from DetectionLab (have you checked it out yet?).

In our packer_files directory, let's create a config for this host called ubuntu.json:

```json
{
  "builders": [
    {
      "boot_command": [
        "<esc><wait>",
        "<esc><wait>",
        "<enter><wait>",
        "/install/vmlinuz<wait>",
        " auto<wait>",
        " console-setup/ask_detect=false<wait>",
        " console-setup/layoutcode=us<wait>",
        " console-setup/modelcode=pc105<wait>",
        " debconf/frontend=noninteractive<wait>",
        " debian-installer=en_US.UTF-8<wait>",
        " fb=false<wait>",
        " initrd=/install/initrd.gz<wait>",
        " kbd-chooser/method=us<wait>",
        " keyboard-configuration/layout=USA<wait>",
        " keyboard-configuration/variant=USA<wait>",
        " locale=en_US.UTF-8<wait>",
        " netcfg/get_domain=vm<wait>",
        " netcfg/get_hostname=vagrant<wait>",
        " grub-installer/bootdev=/dev/sda<wait>",
        " preseed/url=https://ping.detectionlab.network/preseed.cfg<wait>",
        " -- <wait>",
        "<enter><wait>"
      ],
      "vnc_over_websocket": true,
      "insecure_connection": true,
      "boot_wait": "5s",
      "cpus": "{{ user `cpus` }}",
      "disk_size": "{{user `disk_size`}}",
      "guest_os_type": "ubuntu-64",
      "http_directory": "{{user `http_directory`}}",
      "iso_checksum": "{{user `iso_checksum`}}",
      "iso_url": "{{user `mirror`}}/{{user `mirror_directory`}}/{{user `iso_name`}}",
      "keep_registered": true,
      "shutdown_command": "echo 'vagrant' | sudo -S shutdown -P now",
      "ssh_password": "vagrant",
      "ssh_port": 22,
      "ssh_username": "vagrant",
      "ssh_timeout": "10000s",
      "memory": "{{ user `memory` }}",
      "pause_before_connecting": "1m",
      "remote_datastore": "{{user `esxi_datastore`}}",
      "remote_host": "{{user `esxi_host`}}",
      "remote_username": "{{user `esxi_username`}}",
      "remote_password": "{{user `esxi_password`}}",
      "remote_type": "esx5",
      "skip_export": true,
      "tools_upload_flavor": "linux",
      "type": "vmware-iso",
      "vm_name": "Ubuntu2004",
      "vmx_data": {
        "ethernet0.networkName": "{{user `esxi_network_with_dhcp_and_internet` }}",
        "cpuid.coresPerSocket": "1",
        "ethernet0.pciSlotNumber": "32",
        "tools.syncTime": "0",
        "time.synchronize.continue": "0",
        "time.synchronize.restore": "0",
        "time.synchronize.resume.disk": "0",
        "time.synchronize.shrink": "0",
        "time.synchronize.tools.startup": "0",
        "time.synchronize.tools.enable": "0",
        "time.synchronize.resume.host": "0",
        "virtualhw.version": 13
      },
      "vmx_data_post": {
        "ide0:0.present": "FALSE"
      },
      "vnc_disable_password": true,
      "vnc_port_min": 5900,
      "vnc_port_max": 5980
    }
  ],
  "provisioners": [
    {
      "environment_vars": [
        "HOME_DIR=/home/vagrant"
      ],
      "execute_command": "echo 'vagrant' | {{.Vars}} sudo -S -E sh -eux '{{.Path}}'",
      "expect_disconnect": true,
      "scripts": [
        "{{template_dir}}/scripts/update.sh",
        "{{template_dir}}/_common/motd.sh",
        "{{template_dir}}/_common/sshd.sh",
        "{{template_dir}}/scripts/networking.sh",
        "{{template_dir}}/scripts/sudoers.sh",
        "{{template_dir}}/scripts/vagrant.sh",
        "{{template_dir}}/scripts/vmware.sh",
        "{{template_dir}}/scripts/cleanup.sh"
      ],
      "type": "shell"
    }
  ],
  "variables": {
    "box_basename": "ubuntu-20.04",
    "http_directory": "{{template_dir}}/http",
    "build_timestamp": "{{isotime \"20060102150405\"}}",
    "cpus": "2",
    "disk_size": "65536",
    "esxi_datastore": "",
    "esxi_host": "",
    "esxi_username": "",
    "esxi_password": "",
    "headless": "false",
    "guest_additions_url": "",
    "iso_checksum": "sha256:f11bda2f2caed8f420802b59f382c25160b114ccc665dbac9c5046e7fceaced2",
    "iso_name": "ubuntu-20.04.1-legacy-server-amd64.iso",
    "memory": "4096",
    "mirror": "http://cdimage.ubuntu.com",
    "mirror_directory": "ubuntu-legacy-server/releases/20.04/release",
    "name": "ubuntu-20.04",
    "no_proxy": "{{env `no_proxy`}}",
    "preseed_path": "preseed.cfg",
    "template": "ubuntu-20.04-amd64",
    "version": "TIMESTAMP"
  }
}
```

Now make sure your variables are set correctly in variables.json and run:

```shell
packer build -var-file variables.json ubuntu.json
```

Once that's complete, we should have a blank Ubuntu VM image on our ESXi server, ready to be deployed as we wish in the future.
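Since most of the ESXi settings in ubuntu.json default to empty strings, it's easy to forget one. Here's a small pre-flight check you could run first. It's a hypothetical helper, not part of the lab files, and the list of required names is my assumption based on the template above:

```python
from __future__ import annotations

import json

# Assumption: these are the variables ubuntu.json expects variables.json to
# supply. The four esxi_* entries default to "" in the template, and the
# network name is referenced in vmx_data without any default of its own.
REQUIRED_VARS = [
    "esxi_host",
    "esxi_datastore",
    "esxi_username",
    "esxi_password",
    "esxi_network_with_dhcp_and_internet",
]


def missing_vars(var_file_text: str) -> list[str]:
    """Return the required variable names that are absent or blank."""
    values = json.loads(var_file_text)
    return [name for name in REQUIRED_VARS if not str(values.get(name, "")).strip()]


def check_var_file(path: str = "variables.json") -> list[str]:
    """Read a Packer var file from disk and report what's missing."""
    with open(path, encoding="utf-8") as handle:
        return missing_vars(handle.read())
```

Calling check_var_file() from the packer_files directory should return an empty list. Anything it does return is worth filling in before kicking off the build.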

Now we need to edit our Terraform config to include our new Ubuntu server, so move to the terraform_files folder, open up main.tf and add this to the bottom:

```hcl
# Logger
resource "esxi_guest" "logger" {
  guest_name         = "logger"
  disk_store         = var.esxi_datastore
  guestos            = "ubuntu-64"
  boot_disk_type     = "thin"
  memsize            = "4096"
  numvcpus           = "2"
  resource_pool_name = "/"
  power              = "on"
  clone_from_vm      = "Ubuntu2004"

  provisioner "remote-exec" {
    inline = [
      "sudo ifconfig eth0 up && echo 'eth0 up' || echo 'unable to bring eth0 interface up'",
      "sudo ifconfig eth1 up && echo 'eth1 up' || echo 'unable to bring eth1 interface up'"
    ]

    connection {
      host     = self.ip_address
      type     = "ssh"
      user     = "vagrant"
      password = "vagrant"
    }
  }

  # Bridge network
  network_interfaces {
    virtual_network = var.vm_network
    mac_address     = "00:50:56:a3:b1:c9"
    nic_type        = "e1000"
  }

  # This is the local network that will be used for VM comms
  network_interfaces {
    virtual_network = var.hostonly_network
    mac_address     = "00:50:56:a3:b2:c9"
    nic_type        = "e1000"
  }

  guest_startup_timeout  = 45
  guest_shutdown_timeout = 30
}
```

In the last post I said we'd automate the Ansible inventory so we don't have to enter the IP address of each host manually, so let's do that! When Terraform has finished provisioning the hosts, we can grab the values it returns by using an output file. So in terraform_files, let's create output.tf:

```hcl
output "logger_ips" {
  value = esxi_guest.logger.ip_address
}

output "dc1_ips" {
  value = esxi_guest.dc1.ip_address
}

output "workstation1_ips" {
  value = esxi_guest.workstation1.ip_address
}

output "workstation2_ips" {
  value = esxi_guest.workstation2.ip_address
}

resource "local_file" "AnsibleInventory" {
  content = templatefile("./inventory.tmpl",
    {
      logger_ip       = esxi_guest.logger.ip_address,
      dc1_ip          = esxi_guest.dc1.ip_address,
      workstation1_ip = esxi_guest.workstation1.ip_address,
      workstation2_ip = esxi_guest.workstation2.ip_address
    }
  )
  filename = "../ansible_files/inventory.yml"
}
```

In the top half of this file, we output the IP address of each host to the console under a <host>_ips key. In the bottom half, we instruct Terraform to load the template file inventory.tmpl and substitute those variables where required (kind of like a find and replace), before saving the result into our ansible_files directory as inventory.yml.

Let's create that template file, inventory.tmpl, in terraform_files alongside output.tf:

```yaml
---
logger:
  hosts:
    ${logger_ip}:
      ansible_user: vagrant
      ansible_password: vagrant
      ansible_port: 22
      ansible_connection: ssh
      ansible_ssh_common_args: '-o UserKnownHostsFile=/dev/null'
dc1:
  hosts:
    ${dc1_ip}:
workstation1:
  hosts:
    ${workstation1_ip}:
workstation2:
  hosts:
    ${workstation2_ip}:
all:
  vars:
    loggerip: ${logger_ip}
```

Notice how we assigned a variable called "loggerip" to all of the hosts at the bottom there? We'll use it later to reference that IP address in our playbook, when we insert it into the Winlogbeat config file. If there's a better way to do this, I sure couldn't find it...
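If the templatefile() step feels like magic, Python's string.Template happens to use the same ${name} placeholder style, so it makes a handy way to preview what Terraform will write out. This is only an analogy: Terraform's template language also has %{ } directives that string.Template doesn't understand, and the IP addresses below are made up.

```python
from __future__ import annotations

from string import Template

# A cut-down copy of inventory.tmpl (connection settings omitted). It only
# uses ${name} placeholders, the subset of Terraform's template syntax that
# string.Template also understands.
INVENTORY_TMPL = """\
---
logger:
  hosts:
    ${logger_ip}:
dc1:
  hosts:
    ${dc1_ip}:
all:
  vars:
    loggerip: ${logger_ip}
"""


def render_inventory(template_text: str, ips: dict[str, str]) -> str:
    """Fill in every ${name}. Like templatefile(), a missing variable is an
    error (KeyError here) rather than a silent blank."""
    return Template(template_text).substitute(ips)


# Made-up addresses, just to show the output shape.
print(render_inventory(INVENTORY_TMPL, {"logger_ip": "192.168.1.10", "dc1_ip": "192.168.1.11"}))
```

Note that logger_ip appears twice and both occurrences get filled, which is exactly how the same address ends up as both the logger host and the loggerip variable.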

Now, let's build the infrastructure:

```shell
terraform init
terraform apply
```

If you're just updating your lab from the first post, it should only add the Ubuntu host and update the inventory as per our template.
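The outputs from output.tf are also available as JSON via terraform output -json, which is handy if you want to script against the lab later. A minimal sketch, assuming Terraform is on your PATH and you point it at terraform_files (the function names are my own):

```python
from __future__ import annotations

import json
import subprocess


def read_outputs(raw_json: str) -> dict[str, str]:
    """Map each output name (logger_ips, dc1_ips, ...) to its value.

    terraform output -json wraps every output as
    {"sensitive": ..., "type": ..., "value": ...}; we keep only the value.
    """
    return {name: details["value"] for name, details in json.loads(raw_json).items()}


def terraform_outputs(workdir: str = ".") -> dict[str, str]:
    """Run terraform output -json in workdir (e.g. terraform_files)."""
    result = subprocess.run(
        ["terraform", "output", "-json"],
        cwd=workdir,
        capture_output=True,
        text=True,
        check=True,  # raise if Terraform exits non-zero
    )
    return read_outputs(result.stdout)
```

For example, terraform_outputs("terraform_files")["logger_ips"] should give the same address that ends up in inventory.yml.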

Installing ELK

Now that we've got our Ubuntu server up and running, we can set it up with ELK. To do this, first read Hausec's awesome blog. I used some 1337 skills to convert his instructions into an Ansible playbook, which we need to put in our ansible_files directory.

We can make an Ansible role to set up our Ubuntu server with ELK by creating roles/logger/tasks/main.yml:

```yaml
---
- name: Set hostname to logger
  hostname:
    name: logger
  become: yes

- name: Set system clock
  shell: hwclock --hctosys
  become: yes

- name: Add GPG key for Elastic repo
  shell: wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | apt-key add -
  become: yes

- name: Install transport-https
  apt:
    name: apt-transport-https
    state: present
    update_cache: yes
  become: yes

- name: Add the repo
  apt_repository:
    repo: deb https://artifacts.elastic.co/packages/7.x/apt stable main
    state: present
  become: yes

- name: Install ELK
  apt:
    pkg:
      - logstash
      - openjdk-11-jre
      - elasticsearch
      - kibana
    update_cache: yes
    state: present
  become: yes

- name: Enable ELK services
  become: yes
  shell: /bin/systemctl enable elasticsearch.service && /bin/systemctl enable kibana.service && /bin/systemctl enable logstash.service

- name: Edit Kibana config
  become: yes
  shell: |
    sed -i 's/#server.host: "localhost"/server.host: "0.0.0.0"/g' /etc/kibana/kibana.yml; sed -i 's/#server.port: 5601/server.port: 5601/g' /etc/kibana/kibana.yml

- name: Edit Elastic config
  become: yes
  shell: |
    sed -i 's/#network.host: 192.168.0.1/network.host: "0.0.0.0"/g' /etc/elasticsearch/elasticsearch.yml; sed -i 's/#http.port: 9200/http.port: 9200/g' /etc/elasticsearch/elasticsearch.yml

- name: Set discovery type in Elastic config
  become: yes
  lineinfile:
    path: /etc/elasticsearch/elasticsearch.yml
    line: "discovery.type: single-node"
    state: present
  register: discoverytype

- name: Starting ELK services
  become: yes
  shell: service logstash start && service elasticsearch start && service kibana start
```

Next we need to make a config file for our Winlogbeat installation. The easiest way I found to do this was to create the file locally on our provisioning host and then copy it over at... run time? ... play time? Whenever we run our playbook, basically.

Let's create config/winlogbeat.yml:

```yaml
# ======================== Winlogbeat specific options =========================
winlogbeat.event_logs:
  - name: Application
    ignore_older: 30m
  - name: System
    ignore_older: 30m
  - name: Security
    ignore_older: 30m
  - name: Microsoft-Windows-Sysmon/Operational
    ignore_older: 30m
  - name: Windows PowerShell
    event_id: 400, 403, 600, 800
    ignore_older: 30m
  - name: Microsoft-Windows-PowerShell/Operational
    event_id: 4103, 4104, 4105, 4106
  - name: Microsoft-Windows-WMI-Activity/Operational
    event_id: 5857, 5858, 5859, 5860, 5861
  - name: ForwardedEvents
    tags: [forwarded]

# ====================== Elasticsearch template settings =======================
setup.template.settings:
  index.number_of_shards: 1

# =================================== Kibana ===================================
setup.kibana:
  host: "ELKIPHERE:5601"

# ---------------------------- Elasticsearch Output ----------------------------
output.elasticsearch:
  hosts: ["ELKIPHERE:9200"]
  username: "elastic"
  password: "changeme"
  pipeline: "winlogbeat-%{[agent.version]}-routing"

# ================================= Processors =================================
processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
```

See where it says ELKIPHERE? That's a placeholder, and we'll use some magic in the playbook below to replace it with our logger's IP address.
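The "magic" is really just a find-and-replace that runs on each Windows host. If you want to preview the result locally first, here's a rough Python equivalent of that step (the function names are my own, not part of the lab repo):

```python
from pathlib import Path

PLACEHOLDER = "ELKIPHERE"


def fill_placeholder(config_text: str, logger_ip: str) -> str:
    """Swap every ELKIPHERE for the logger's IP, like the playbook's .replace()."""
    rendered = config_text.replace(PLACEHOLDER, logger_ip)
    # The playbook writes the file with Set-Content -Encoding Ascii, which
    # quietly turns non-ASCII characters into '?'. Raising here instead
    # (UnicodeEncodeError) makes that kind of problem visible.
    rendered.encode("ascii")
    return rendered


def fill_config_file(path: str, logger_ip: str) -> None:
    """Rewrite a local copy of winlogbeat.yml in place."""
    target = Path(path)
    target.write_text(fill_placeholder(target.read_text(encoding="utf-8"), logger_ip), encoding="ascii")
```

Because both the Kibana and Elasticsearch entries use the same placeholder, one replace covers them both.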

Now we need to create a playbook role that will install Winlogbeat on the Windows hosts we select. Let's put it in roles/monitor/tasks/main.yml:

```yaml
---
- name: Downloading Winlogbeat
  win_get_url:
    url: https://artifacts.elastic.co/downloads/beats/winlogbeat/winlogbeat-8.0.0-windows-x86_64.zip
    dest: C:\Windows\Temp\winlogbeat.zip

- name: Unzipping Winlogbeat archive
  win_unzip:
    src: C:\Windows\Temp\winlogbeat.zip
    dest: C:\Windows\Temp
    delete_archive: yes

- name: Copy Winlogbeat config file
  win_copy:
    src: config/winlogbeat.yml
    dest: C:\Windows\Temp\winlogbeat-8.0.0-windows-x86_64\winlogbeat.yml

- name: Add in our ELK IP to config file
  win_shell: |
    (Get-Content "C:\Windows\Temp\winlogbeat-8.0.0-windows-x86_64\winlogbeat.yml").replace('ELKIPHERE', '{{ loggerip }}') | Set-Content -Path "C:\Windows\Temp\winlogbeat-8.0.0-windows-x86_64\winlogbeat.yml" -Encoding Ascii

- name: Install Winlogbeat
  win_shell: C:\Windows\Temp\winlogbeat-8.0.0-windows-x86_64\install-service-winlogbeat.ps1

- name: Start Winlogbeat service
  win_shell: Start-Service winlogbeat
```

Cool! Now we just need to update our main playbook with our Ubuntu server and add the monitor role to our Windows hosts.

Let's make those changes in playbook.yml:

```yaml
---
- hosts: logger
  roles:
    - logger
  tags: logger

- hosts: dc1
  strategy: free
  roles:
    - dc1
    - monitor
  tags: dc1

- hosts: workstation1
  strategy: free
  roles:
    - workstation1
    - common
    - monitor
  tags: workstation1

- hosts: workstation2
  strategy: free
  roles:
    - workstation2
    - common
    - monitor
  tags: workstation2
```

Finally, run the playbook and point it at our new inventory file:

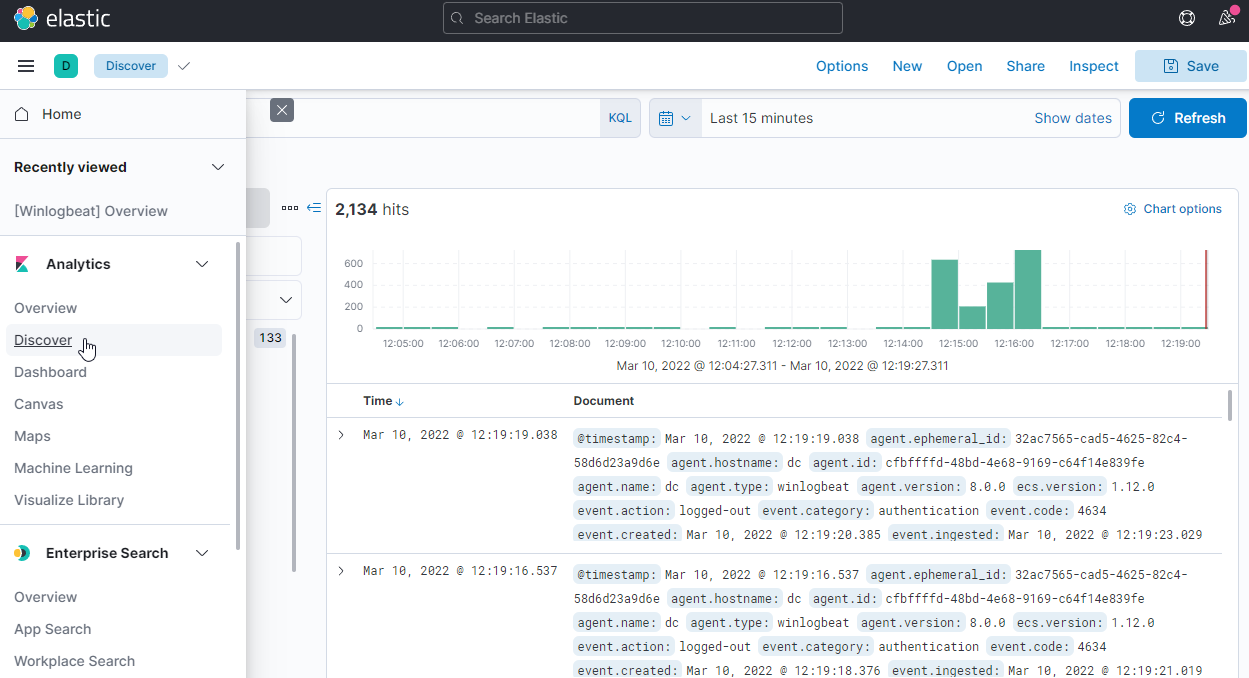

ansible-playbook -v playbook.yml -i inventory.ymlOnce that's done, we should be up and running! We can verify it all installed by browsing to our logger host on port 5601, where we should be greeted with the Elastic dashboard:
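If you'd rather check from the command line, here's a small Python sketch that asks Elasticsearch for its version and for the number of Winlogbeat documents it holds. It assumes Elasticsearch security hasn't been switched on (the logger role doesn't enable it) and that events land under the default winlogbeat-* naming; check_logger is a hypothetical helper, not part of the lab files.

```python
import json
import urllib.request


def parse_version(body: bytes) -> str:
    """Pull the version number out of Elasticsearch's root (/) response."""
    return json.loads(body)["version"]["number"]


def parse_count(body: bytes) -> int:
    """Pull the document count out of a _count response."""
    return int(json.loads(body)["count"])


def check_logger(logger_ip: str, timeout: float = 10.0) -> tuple:
    """Return (elasticsearch_version, winlogbeat_doc_count) for the logger host.

    Assumes plain HTTP on port 9200 with no credentials, as set up above.
    """
    base = f"http://{logger_ip}:9200"
    with urllib.request.urlopen(f"{base}/", timeout=timeout) as response:
        version = parse_version(response.read())
    with urllib.request.urlopen(f"{base}/winlogbeat-*/_count", timeout=timeout) as response:
        count = parse_count(response.read())
    return version, count
```

If the count stays at zero a few minutes after the playbook finishes, the Windows side is the first place I'd look: is the winlogbeat service running, and did ELKIPHERE actually get replaced?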

If we need to make changes to which events are forwarded, we can do that in the winlogbeat.yml config file from earlier.
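When editing the event_id lines, it helps to know the syntax. As I understand the Winlogbeat docs, each comma-separated entry is a single ID to include (4624), an inclusive range to include (4700-4800), or an ID to exclude (-4735). Here's a small Python model of that matching logic for reasoning about a filter before you deploy it. It's my own approximation, not Winlogbeat's actual implementation.

```python
from __future__ import annotations


def parse_event_ids(spec: str) -> tuple[list[range], set[int]]:
    """Split an event_id string such as "4624, 4700-4800, -4735" into
    inclusive ranges to keep and single IDs to drop."""
    includes: list[range] = []
    excludes: set[int] = set()
    for token in (part.strip() for part in spec.split(",")):
        if not token:
            continue
        if token.startswith("-"):
            excludes.add(int(token[1:]))  # "-4735" excludes one ID
        elif "-" in token:
            low, high = (int(x) for x in token.split("-", 1))
            includes.append(range(low, high + 1))  # ranges are inclusive
        else:
            value = int(token)
            includes.append(range(value, value + 1))
    return includes, excludes


def event_id_matches(spec: str, event_id: int) -> bool:
    """Would an event with this ID get through the filter?"""
    includes, excludes = parse_event_ids(spec)
    if event_id in excludes:
        return False
    # Assumption: if only exclusions are listed, every other ID passes.
    return not includes or any(event_id in r for r in includes)
```

For example, with the Windows PowerShell channel's "400, 403, 600, 800", event 403 is shipped and event 401 is not.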

And that's it! In a future post I'll be adding Sysmon and more servers such as file shares, and I may even attempt to automate building an Exchange server if my blood pressure stabilises.

Happy hacking!