Automating a Red Team Lab (Part 1): Domain Creation

We'll go over how to build a basic AD setup using infrastructure-as-code to deploy a consistent lab every time.

If you're anything like me, you will tend to rip your test lab down and build it again at least once a day. Whilst this is definitely great fun and an efficient usage of your time, wouldn't it be amazing to just have a fire and forget script that will set it up exactly how you want, every time?

Luckily this is achievable using Infrastructure-as-Code! In this post, I'm going to go over how we can use Packer, Terraform and Ansible to go from an empty ESXi server to an up and running Windows domain in no time.

In case you wanted to play along at home, all the code in this post can be found on GitHub here.

Initial Setup

I'm going to assume that you already have an ESXi server set up at home, because what kind of Red Teamer are you if you haven't? Get out of here with your Cloud solutions.

We're going to need to enable SSH on ESXi. You can do this through the web interface; just remember to disable it again once the lab is built, since the Terraform provider we use later also connects over SSH (port 22).

Connect to your ESXi server over SSH and enable GuestIPHack. Honestly, I have no idea what this does, but I've tried without it and it doesn't work:

```shell
esxcli system settings advanced set -o /Net/GuestIPHack -i 1
```

EDIT: It bugged me enough that I looked it up. It allows Packer to infer the IP of the VM from ESXi by using ARP packet inspection.

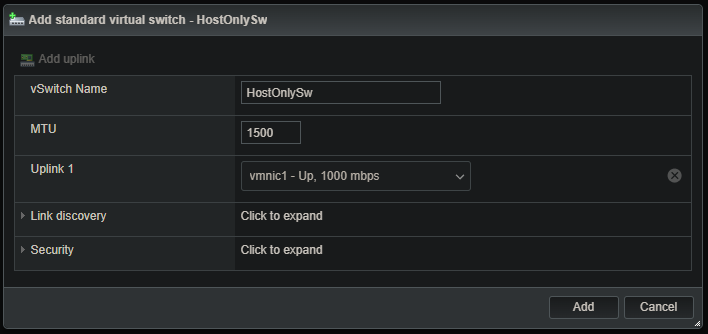

Next, we're going to need to make a new network for the domain to live on. We can configure this after we're up and running to isolate it from our home network.

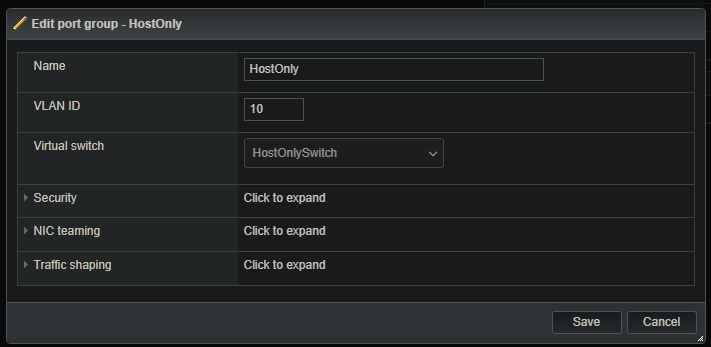

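We'll create a virtual switch, then create a port group and assign it to that switch:

- Networking > Virtual switches > Add standard virtual switch
- Networking > Port groups > Add port group

Name the port group HostOnly, since that's the name the Terraform config expects later (or change the hostonly_network variable to match).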
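If you'd rather skip the clicking, the same switch and port group can be created from the ESXi shell with esxcli. Here's a minimal Python sketch that builds those commands and runs them over SSH, assuming SSH is enabled as above and you can log in as root. The switch name HostOnlySwitch is a hypothetical choice; the port group name HostOnly matches the Terraform variable used later.

```python
from __future__ import annotations

import shlex
import subprocess


def hostonly_network_commands(vswitch: str = "HostOnlySwitch",
                              portgroup: str = "HostOnly") -> list[list[str]]:
    """Build the esxcli commands that create a standard vSwitch and a port group on it."""
    return [
        # Create the standard virtual switch (hypothetical default name).
        ["esxcli", "network", "vswitch", "standard", "add",
         f"--vswitch-name={vswitch}"],
        # Create the port group and attach it to that switch.
        ["esxcli", "network", "vswitch", "standard", "portgroup", "add",
         f"--portgroup-name={portgroup}", f"--vswitch-name={vswitch}"],
    ]


def run_over_ssh(esxi_host: str, command: list[str], user: str = "root") -> None:
    """Run one command on the ESXi host; ssh takes the remote command as a single string."""
    remote = " ".join(shlex.quote(arg) for arg in command)
    subprocess.run(["ssh", f"{user}@{esxi_host}", remote], check=True)


# Example (replace the placeholder with your ESXi host's IP):
# for cmd in hostonly_network_commands():
#     run_over_ssh("192.168.X.X", cmd)
```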

OK, now that our ESXi server is ready to go, we need somewhere to provision it from. To make things easy, I'm going to use WSL, but any Linux-based host will work too.

We'll need to install OVFTool, which is used for importing, exporting and managing VMs remotely from the command line. It's an awesome tool, but to download it you have to go through the VMware website and create an account, because they are literally the worst people ever:

https://code.vmware.com/web/tool/4.4.0/ovf

Download the .bundle file. You can execute it as a script and it should install nicely.

We need a few other things. Luckily, these can all be installed without leaving the comfort of our terminal, you know, like civilized software packages. We'll need:

- Ansible

- Python3-WinRM

- SSHPass

- Terraform

- Packer

Packer and Terraform both come from the HashiCorp repo, so we'll need to add it to our sources. Whatever you do, don't install Packer from the default Ubuntu repo like I did initially. It's old and broken, just like my soul.

```shell
# Add HashiCorp's signing key and apt repo (provides both packer and terraform).
# Note: apt-key is deprecated on newer Ubuntu releases.
curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -
sudo apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"
sudo apt-get update && sudo apt-get install packer

# Everything else
sudo apt install ansible python3-winrm sshpass terraform
```
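Before moving on, it's worth checking that everything actually landed on your PATH. This is a small Python sketch using only the standard library; the tool list is taken from the steps above, and the check for the `winrm` module assumes Ansible runs under the same system Python that python3-winrm installs into.

```python
from __future__ import annotations

import importlib.util
import shutil

# ovftool comes from the VMware .bundle rather than apt, so it's the one most likely to be missing.
REQUIRED_TOOLS = ["packer", "terraform", "ansible-playbook", "sshpass", "ovftool"]


def missing_tools(tools: list[str] = REQUIRED_TOOLS, path: str | None = None) -> list[str]:
    """Return the tools that can't be found on PATH (or on `path`, if given)."""
    return [tool for tool in tools if shutil.which(tool, path=path) is None]


def winrm_module_available() -> bool:
    """python3-winrm provides the `winrm` Python module used for WinRM connections."""
    return importlib.util.find_spec("winrm") is not None


if __name__ == "__main__":
    print("Missing tools:", missing_tools() or "none")
    print("winrm module found:", winrm_module_available())
```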

Done! Now we're ready to start VMing.

Packer: Making the VM Images

Packer is a tool for automating the building of VM images. The way it works is that we create a JSON config file that provides a link to an ISO and some parameters for the machine (disk size, memory allocation, etc.), and it goes away and does some magic for us.

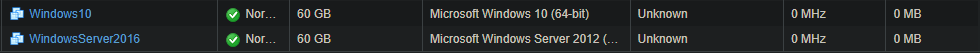

In this case, we need a Windows 10 image and a Windows Server 2016 image. We can then deploy each image as many times as we want as we add more hosts.

There are plenty of Packer templates out there. Some of them are old and don't work with the latest version of Packer, so be wary, but if you read through a few you'll get a feel for the kind of things that go in there. Here is a good example and here are some more.

I like to use the Packer config and scripts from DetectionLab because I know they work, and that's unusual for computer things. DetectionLab was what got me interested in IaC in the first place and is an awesome project, which you can read more about here. Check it out.

First up, we need to point Packer at our ESXi server. In the packer_files directory, edit variables.json:

```json
{
  "esxi_host": "192.168.X.X",
  "esxi_datastore": "datastore_name_here",
  "esxi_username": "root_yolo",
  "esxi_password": "password_here",
  "esxi_network_with_dhcp_and_internet": "VM Network"
}
```
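Since a typo in this file only shows up once Packer is already running, a quick sanity check can save a failed build. A minimal Python sketch, assuming the required keys are exactly the five in the file above and that unedited values still contain the `X.X` or `_here` placeholders:

```python
from __future__ import annotations

import json

# The keys used in the variables.json above.
REQUIRED_KEYS = {
    "esxi_host",
    "esxi_datastore",
    "esxi_username",
    "esxi_password",
    "esxi_network_with_dhcp_and_internet",
}


def check_packer_vars(text: str) -> list[str]:
    """Return a list of problems with a variables.json document (empty if it looks fine)."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(data, dict):
        return ["top level must be a JSON object"]
    problems = [f"missing key: {key}" for key in sorted(REQUIRED_KEYS - data.keys())]
    # Flag values that still look like the placeholders from the example.
    for key, value in data.items():
        if isinstance(value, str) and ("X.X" in value or value.endswith("_here")):
            problems.append(f"placeholder value left in: {key}")
    return problems
```

Usage: `print(check_packer_vars(open("packer_files/variables.json").read()))`.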

The two main Packer config files we're going to be using are here:

- windows_10_esxi.json

- windows_2016_esxi.json

Important: The ISO listed in the DetectionLab version is for Windows 10 Enterprise Evaluation. If you downloaded the repo from my GitHub, it's for Windows 10 Pro. This matters because, to my knowledge, the evaluation edition of Windows 10 can't be upgraded to a fully licensed version, whereas the Pro image can be licensed after the grace period expires. Good to know if you want to set up a more permanent lab.

You can change the base specification for each template in these here files. I like to give them 8GB of RAM to try and speed things up, but

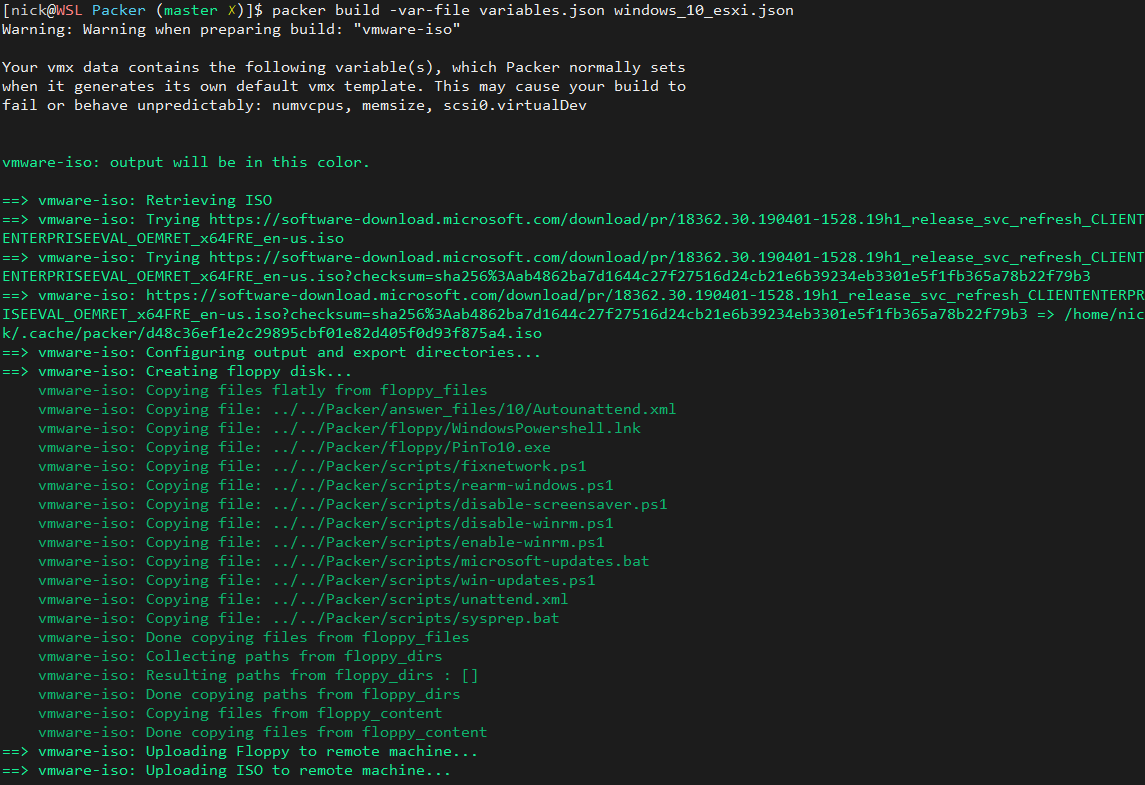

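For this next stage it helps to use tmux (or screen, whatever), so we can split the terminal in half and run both builds in parallel. Start tmux, then press Ctrl + B followed by Shift + 5 (i.e. %) to split the window into two side-by-side panes:

```shell
tmux
```

In one pane, from the packer_files directory, build the Windows 10 image:

```shell
packer build -var-file variables.json windows_10_esxi.json
```

Then switch to the other pane (Ctrl + B, then the Left or Right arrow key) and build the Server 2016 image:

```shell
packer build -var-file variables.json windows_2016_esxi.json
```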

And we're off! This will take some time: it's going to download the ISOs for each version of Windows from the Microsoft website, then install them as VMs on the ESXi server using OVFTool. Then it runs the system prep scripts listed in the Packer config, shuts down the VM and turns it into an image.

This took 9 minutes on my server, but it can take longer depending on your line speed and how much juice your server has:

```
Build 'vmware-iso' finished after 9 minutes 3 seconds.
```

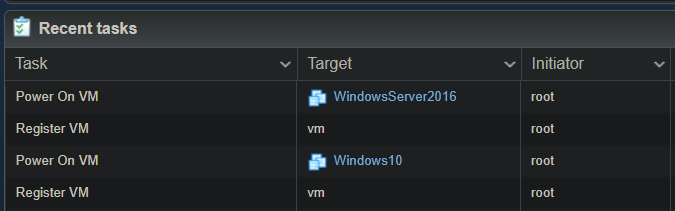

While this is running, you can switch to your ESXi console to see real-life magic happening.

If you click on the individual VM names, you can see the console preview, which gives you an idea of what stage they're at in the provisioning process and lets you check they haven't stalled while installing Windows or something similar. That said, the scripts from my repo definitely had no issues at the time of writing.

You'll know it's complete when you have two new VMs in a powered-off state. Terraform clones these by name in the next step (Windows10 and WindowsServer2016 in the config below), so make sure the names match.

There we go, VM images complete. In case you didn't know how, you can exit tmux by pressing Ctrl + D in each pane.
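If you'd rather skip tmux altogether, the two builds can also be started in parallel from a script. This is a minimal Python sketch, assuming it runs with packer_files as the working directory and that one log file per template (a hypothetical naming scheme, e.g. windows_10_esxi.log) is an acceptable way to keep the two outputs from interleaving:

```python
from __future__ import annotations

import subprocess
from contextlib import ExitStack
from pathlib import Path

TEMPLATES = ["windows_10_esxi.json", "windows_2016_esxi.json"]


def packer_command(template: str, var_file: str = "variables.json",
                   packer_bin: str = "packer") -> list[str]:
    """The same command as above: packer build -var-file variables.json <template>."""
    return [packer_bin, "build", "-var-file", var_file, template]


def build_all(workdir: str = "packer_files", templates: list[str] = TEMPLATES,
              packer_bin: str = "packer") -> dict[str, int]:
    """Start every build at once, send each one's output to <template>.log, return exit codes."""
    with ExitStack() as stack:
        procs = {}
        for template in templates:
            log_path = Path(workdir) / f"{Path(template).stem}.log"
            log = stack.enter_context(open(log_path, "w"))
            procs[template] = subprocess.Popen(
                packer_command(template, packer_bin=packer_bin),
                cwd=workdir, stdout=log, stderr=subprocess.STDOUT,
            )
        # Wait for every build to finish before the log files are closed.
        return {template: proc.wait() for template, proc in procs.items()}
```

A non-zero exit code in the returned dictionary tells you which log to read first.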

Terraform: Deploy VMs from the Images

Next we're going to use Terraform. This takes the prepped VM image seeds, plants them in the virtual garden and waters them until they grow into beautiful blank Windows machines.

Let's create some config files. In the terraform_files directory, edit variables.tf and add:

```hcl
variable "esxi_hostname" {
  default = "<IP>"
}

variable "esxi_hostport" {
  default = "22"
}

variable "esxi_username" {
  default = "root"
}

variable "esxi_password" {
  default = "<password>"
}

variable "esxi_datastore" {
  default = "<datastore>"
}

variable "vm_network" {
  default = "VM Network"
}

variable "hostonly_network" {
  default = "HostOnly"
}
```
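Hard-coding the ESXi password as a default in variables.tf is easy to commit by accident. Terraform automatically loads a file named terraform.tfvars.json, and values in it override the defaults above (TF_VAR_esxi_password in the environment works too). A minimal Python sketch that writes that file with owner-only permissions, assuming terraform_files is the Terraform directory:

```python
from __future__ import annotations

import json
import os
from pathlib import Path


def write_tfvars(values: dict[str, str], directory: str = "terraform_files") -> Path:
    """Write terraform.tfvars.json, which Terraform loads automatically."""
    path = Path(directory) / "terraform.tfvars.json"
    # Create the file readable only by the owner, since it holds the ESXi password.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as f:
        json.dump(values, f, indent=2)
        f.write("\n")
    os.chmod(path, 0o600)  # tighten permissions if the file already existed
    return path
```

Usage: `write_tfvars({"esxi_hostname": "192.168.1.10", "esxi_password": getpass.getpass("ESXi password: ")})`, then add terraform.tfvars.json to your .gitignore.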

Now, ESXi isn't "officially" supported by Terraform, at least not without vSphere. So to get it to play nice, we need to use some magic: this unofficial provider works like a charm, so kudos to the developers there. Add it to versions.tf:

```hcl
terraform {
  required_version = ">= 1.0.0"

  required_providers {
    esxi = {
      source  = "josenk/esxi"
      version = "1.9.0"
    }
  }
}
```

main.tf is where the action happens and where we define our infrastructure. I've configured it to create a Windows Server 2016 domain controller and two Windows 10 hosts:

```hcl
# Define our ESXi variables from variables.tf
provider "esxi" {
  esxi_hostname = var.esxi_hostname
  esxi_hostport = var.esxi_hostport
  esxi_username = var.esxi_username
  esxi_password = var.esxi_password
}

# Domain Controller
resource "esxi_guest" "dc1" {
  guest_name         = "dc1"
  disk_store         = var.esxi_datastore
  guestos            = "windows9srv-64"
  boot_disk_type     = "thin"
  memsize            = "8192"
  numvcpus           = "2"
  resource_pool_name = "/"
  power              = "on"
  clone_from_vm      = "WindowsServer2016"

  # This is the network that bridges your host machine with the ESXi VM
  network_interfaces {
    virtual_network = var.vm_network
    mac_address     = "00:50:56:a1:b1:c1"
    nic_type        = "e1000"
  }

  # This is the local network that will be used for VM comms
  network_interfaces {
    virtual_network = var.hostonly_network
    mac_address     = "00:50:56:a1:b2:c1"
    nic_type        = "e1000"
  }

  guest_startup_timeout  = 45
  guest_shutdown_timeout = 30
}

# Workstation 1
resource "esxi_guest" "workstation1" {
  guest_name         = "workstation1"
  disk_store         = var.esxi_datastore
  guestos            = "windows9-64"
  boot_disk_type     = "thin"
  memsize            = "8192"
  numvcpus           = "2"
  resource_pool_name = "/"
  power              = "on"
  clone_from_vm      = "Windows10"

  # This is the network that bridges your host machine with the ESXi VM
  network_interfaces {
    virtual_network = var.vm_network
    mac_address     = "00:50:56:a2:b1:c3"
    nic_type        = "e1000"
  }

  # This is the local network that will be used for VM comms
  network_interfaces {
    virtual_network = var.hostonly_network
    mac_address     = "00:50:56:a2:b2:c3"
    nic_type        = "e1000"
  }

  guest_startup_timeout  = 45
  guest_shutdown_timeout = 30
}

# Workstation 2
resource "esxi_guest" "workstation2" {
  guest_name         = "workstation2"
  disk_store         = var.esxi_datastore
  guestos            = "windows9-64"
  boot_disk_type     = "thin"
  memsize            = "8192"
  numvcpus           = "2"
  resource_pool_name = "/"
  power              = "on"
  clone_from_vm      = "Windows10"

  # This is the network that bridges your host machine with the ESXi VM
  network_interfaces {
    virtual_network = var.vm_network
    mac_address     = "00:50:56:a2:b1:c4"
    nic_type        = "e1000"
  }

  # This is the local network that will be used for VM comms
  network_interfaces {
    virtual_network = var.hostonly_network
    mac_address     = "00:50:56:a2:b2:c4"
    nic_type        = "e1000"
  }

  guest_startup_timeout  = 45
  guest_shutdown_timeout = 30
}
```

Now that we've defined it, it's time to build it. First, initialise terraform to download the ESXi provider defined in versions.tf, and run a plan to check we've configured our main.tf correctly and give our infrastructure a final check over:

```
[nick@WSL redlab ]$ terraform init

Initializing the backend...

Initializing provider plugins...
- Finding josenk/esxi versions matching "1.9.0"...
- Installing josenk/esxi v1.9.0...
- Installed josenk/esxi v1.9.0 (self-signed, key ID A3C2BB2C490C3920)

Partner and community providers are signed by their developers.
If you'd like to know more about provider signing, you can read about it here:
https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has created a lock file .terraform.lock.hcl to record the provider
selections it made above. Include this file in your version control repository
so that Terraform can guarantee to make the same selections by default when
you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

```
[nick@WSL redlab ]$ terraform plan

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # esxi_guest.dc1 will be created
  + resource "esxi_guest" "dc1" {
      + boot_disk_size         = (known after apply)
      + boot_disk_type         = "thin"
      + clone_from_vm          = "WindowsServer2016"
      + disk_store             = "datastore"
      + guest_name             = "dc1"
      + guest_shutdown_timeout = 30
      + guest_startup_timeout  = 45
      + guestos                = "windows9srv-64"
      + id                     = (known after apply)
      + ip_address             = (known after apply)
      + memsize                = "8192"
      + notes                  = (known after apply)
      + numvcpus               = "2"
      + ovf_properties_timer   = (known after apply)
      + power                  = "on"
      + resource_pool_name     = "/"
      + virthwver              = (known after apply)

      + network_interfaces {
          + mac_address     = "00:50:56:a1:b1:c1"
          + nic_type        = "e1000"
          + virtual_network = "VM Network"
        }
      + network_interfaces {
          + mac_address     = "00:50:56:a1:b2:c1"
          + nic_type        = "e1000"
          + virtual_network = "HostOnly"
        }
    }

  # esxi_guest.workstation1 will be created
  + resource "esxi_guest" "workstation1" {
      + boot_disk_size         = (known after apply)
      + boot_disk_type         = "thin"
      + clone_from_vm          = "Windows10"
      + disk_store             = "datastore"
      + guest_name             = "workstation1"
      + guest_shutdown_timeout = 30
      + guest_startup_timeout  = 45
      + guestos                = "windows9-64"
      + id                     = (known after apply)
      + ip_address             = (known after apply)
      + memsize                = "8192"
      + notes                  = (known after apply)
      + numvcpus               = "2"
      + ovf_properties_timer   = (known after apply)
      + power                  = "on"
      + resource_pool_name     = "/"
      + virthwver              = (known after apply)

      + network_interfaces {
          + mac_address     = "00:50:56:a2:b1:c3"
          + nic_type        = "e1000"
          + virtual_network = "VM Network"
        }
      + network_interfaces {
          + mac_address     = "00:50:56:a2:b2:c3"
          + nic_type        = "e1000"
          + virtual_network = "HostOnly"
        }
    }

  # esxi_guest.workstation2 will be created
  + resource "esxi_guest" "workstation2" {
      + boot_disk_size         = (known after apply)
      + boot_disk_type         = "thin"
      + clone_from_vm          = "Windows10"
      + disk_store             = "datastore"
      + guest_name             = "workstation2"
      + guest_shutdown_timeout = 30
      + guest_startup_timeout  = 45
      + guestos                = "windows9-64"
      + id                     = (known after apply)
      + ip_address             = (known after apply)
      + memsize                = "8192"
      + notes                  = (known after apply)
      + numvcpus               = "2"
      + ovf_properties_timer   = (known after apply)
      + power                  = "on"
      + resource_pool_name     = "/"
      + virthwver              = (known after apply)

      + network_interfaces {
          + mac_address     = "00:50:56:a2:b1:c4"
          + nic_type        = "e1000"
          + virtual_network = "VM Network"
        }
      + network_interfaces {
          + mac_address     = "00:50:56:a2:b2:c4"
          + nic_type        = "e1000"
          + virtual_network = "HostOnly"
        }
    }

Plan: 3 to add, 0 to change, 0 to destroy.
```

Run terraform apply and enter "yes" when prompted to get things moving.

Again this is going to take a while, so grab a coffee and toast some marshmallows on whatever device is running ESXi in your house.

Once this is done, I HIGHLY recommend taking a VM snapshot of each host, just in case. Accidents happen...
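Snapshots can be taken from the web UI, but they can also be scripted from the ESXi shell with vim-cmd: `vim-cmd vmsvc/getallvms` lists each VM's Vmid, and `vim-cmd vmsvc/snapshot.create <vmid> <name>` snapshots it. The Python sketch below parses the listing and builds those commands; it assumes VM names contain no spaces (true for dc1, workstation1 and workstation2), so check the parsing against your own getallvms output. The snapshot name clean-build is a hypothetical choice.

```python
from __future__ import annotations


def parse_getallvms(output: str) -> dict[str, str]:
    """Map VM name -> Vmid from `vim-cmd vmsvc/getallvms` output.

    Assumes VM names contain no spaces; rows that don't start with a numeric
    Vmid (the header, wrapped annotations) are skipped.
    """
    vms: dict[str, str] = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0].isdigit():
            vms[fields[1]] = fields[0]
    return vms


def snapshot_commands(vms: dict[str, str], names: list[str],
                      snapshot_name: str = "clean-build") -> list[list[str]]:
    """Build one `vim-cmd vmsvc/snapshot.create <vmid> <name>` command per VM."""
    return [["vim-cmd", "vmsvc/snapshot.create", vms[name], snapshot_name]
            for name in names]
```

Run the resulting commands in the ESXi shell over SSH (for example `ssh root@<esxi> vim-cmd vmsvc/snapshot.create 1 clean-build`).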

Ansible: Provision the Hosts

Whew! Almost there. Now we just need to configure the hosts with Ansible. Ansible is a tool that connects to hosts in parallel and provisions them using remote management tools. In this case we're using WinRM, because slow and unpredictable is the best.

To do this, we need a few things. First, we have to do something manually and create an inventory file (for now at least; we can automate this step later, but let's keep it "simple"). Ansible uses this file to find hosts on the network. Grab the IP of each host from the ESXi console and put them into the following file:

inventory.yml:

```yaml
---
dc1:
  hosts:
    0.0.0.0:
workstation1:
  hosts:
    0.0.0.0:
workstation2:
  hosts:
    0.0.0.0:
```
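One possible way to automate this step later: `terraform show -json` prints the state, including each esxi_guest's ip_address attribute (it appears as "known after apply" in the plan above). The Python sketch below turns that into the inventory layout above. It assumes the address the provider reports is the one Ansible can reach (the VM Network DHCP address), which you should verify against the ESXi console, and it rejects the 0.0.0.0 placeholder.

```python
from __future__ import annotations

import ipaddress
import json


def ips_from_state(state_json: str) -> dict[str, str]:
    """Map each esxi_guest resource name to its ip_address in `terraform show -json` output."""
    state = json.loads(state_json)
    resources = state.get("values", {}).get("root_module", {}).get("resources", [])
    return {
        r["name"]: r["values"]["ip_address"]
        for r in resources
        if r.get("type") == "esxi_guest" and r.get("values", {}).get("ip_address")
    }


def render_inventory(hosts: dict[str, str]) -> str:
    """Render group -> IP in the same layout as inventory.yml above."""
    lines = ["---"]
    for group, ip in hosts.items():
        addr = ipaddress.ip_address(ip)  # raises ValueError on malformed input
        if addr.is_unspecified:
            raise ValueError(f"{group} still has the placeholder address {ip}")
        lines += [f"{group}:", "  hosts:", f"    {ip}:"]
    return "\n".join(lines) + "\n"
```

Usage: capture `terraform show -json` from the terraform_files directory (for example with `subprocess.run(..., capture_output=True, text=True)`) and write `render_inventory(ips_from_state(output))` to inventory.yml. The resource names dc1, workstation1 and workstation2 conveniently match the inventory group names.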

Next, let's create a variables file with our passwords, users, domain name and such. Make a directory called group_vars and add some values to group_vars/all.yml:

```yaml
ansible_user: vagrant
ansible_password: vagrant
ansible_port: 5985
ansible_connection: winrm
ansible_winrm_transport: basic
ansible_winrm_server_cert_validation: ignore

domain_name: hacklab.local
domain_admin_user: overwatch
domain_admin_pass: DomainAdminPass1!
domain_user_user: alice
domain_user_pass: AlicePass1!

exchange_domain_user: '{{ domain_admin_user }}@{{ domain_name | upper }}'
exchange_domain_pass: '{{ domain_admin_pass }}'
```

Obviously, these can be changed to suit your requirements.

Ansible runs instructions on hosts from YAML files; these sets of instructions are called playbooks. Tasks can be grouped into roles, and we can assign different roles to different hosts to provision them as we see fit. For example, we want to set up a Domain Controller and two Windows 10 workstations, so we can create a role for each of those use cases.
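Because these settings point Ansible at plain-HTTP WinRM on port 5985, a quick reachability check before running anything can tell a network problem apart from a credentials problem. A minimal Python sketch; it only tests that the TCP port accepts connections and does not validate the vagrant credentials:

```python
from __future__ import annotations

import socket


def winrm_reachable(host: str, port: int = 5985, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the WinRM HTTP port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable or timed out
        return False


def unreachable_hosts(hosts: list[str], port: int = 5985) -> list[str]:
    """Return the hosts whose WinRM port did not accept a connection."""
    return [host for host in hosts if not winrm_reachable(host, port)]
```

Once the ports answer, `ansible all -i inventory.yml -m win_ping` exercises the full WinRM login as well.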

Make the following directory structure/file:

roles/dc1/tasks/main.yml

Then we can add the following set of instructions to configure the host as a domain controller:

```yaml
---
- name: Disable Windows Firewall
  win_shell: "Set-NetFirewallProfile -Profile Domain,Public,Private -Enabled False"

- name: Hostname -> DC
  win_hostname:
    name: dc
  register: res

- name: Reboot
  win_reboot:
  when: res.reboot_required

- name: Disable password complexity
  win_security_policy:
    section: System Access
    key: PasswordComplexity
    value: 0

- name: Set Local Admin Password
  win_user:
    name: Administrator
    password: '{{ domain_admin_pass }}'
    state: present
    groups_action: add
    groups:
      - Administrators
      - Users
  ignore_errors: yes

- name: Set HostOnly IP address
  win_shell: "If (-not(Get-NetIPAddress | where {$_.IPAddress -eq '192.168.56.100'})) {$adapter = (get-netadapter | where {$_.MacAddress -eq '00-50-56-A1-B2-C1'}).Name; New-NetIPAddress -InterfaceAlias $adapter -AddressFamily IPv4 -IPAddress 192.168.56.100 -PrefixLength 24 -DefaultGateway 192.168.56.1 } Else { Write-Host 'IP Address Already Created.' }"

- name: Set DNS server
  win_shell: "$adapter = (get-netadapter | where {$_.MacAddress -eq '00-50-56-A1-B2-C1'}).Name; Set-DnsClientServerAddress -InterfaceAlias $adapter -ServerAddresses 127.0.0.1,8.8.8.8"

- name: Check Variables are Configured
  assert:
    that:
      - domain_name is defined
      - domain_admin_user is defined
      - domain_admin_pass is defined

- name: Install Active Directory Domain Services
  win_feature:
    name: AD-Domain-Services
    include_management_tools: yes
    include_sub_features: yes
    state: present
  register: adds_installed

- name: Install RSAT AD Admin Center
  win_feature:
    name: RSAT-AD-AdminCenter
    state: present
  register: rsat_installed

- name: Rebooting
  win_reboot:
    reboot_timeout_sec: 60

- name: Create Domain
  win_domain:
    dns_domain_name: '{{ domain_name }}'
    safe_mode_password: '{{ domain_admin_pass }}'
  register: domain_setup

- name: Reboot After Domain Creation
  win_reboot:
  when: domain_setup.reboot_required

- name: Create Domain Admin Account
  win_domain_user:
    name: '{{ domain_admin_user }}'
    upn: '{{ domain_admin_user }}@{{ domain_name }}'
    description: '{{ domain_admin_user }} Domain Account'
    password: '{{ domain_admin_pass }}'
    password_never_expires: yes
    groups:
      - Domain Admins
      - Enterprise Admins
      - Schema Admins
    state: present
  register: pri_domain_setup_create_user_result
  retries: 30
  delay: 15
  until: pri_domain_setup_create_user_result is successful

- name: Create Domain User Account
  win_domain_user:
    name: '{{ domain_user_user }}'
    upn: '{{ domain_user_user }}@{{ domain_name }}'
    description: '{{ domain_user_user }} Domain Account'
    password: '{{ domain_user_pass }}'
    password_never_expires: yes
    groups:
      - Domain Users
    state: present
  register: create_user_result
  retries: 30
  delay: 15
  until: create_user_result is successful

- name: Verify Account was Created
  win_whoami:
  become: yes
  become_method: runas
  vars:
    ansible_become_user: '{{ domain_admin_user }}@{{ domain_name }}'
    ansible_become_pass: '{{ domain_admin_pass }}'
```

Next, in roles/common/tasks/main.yml, we can configure a generic "common" role that should be run on several hosts (in our case, the workstations):

```yaml
---
- name: Disable Windows Firewall
  win_shell: "Set-NetFirewallProfile -Profile Domain,Public,Private -Enabled False"

- name: Install git
  win_chocolatey:
    name: git
    state: present

- name: Check Variables are Set
  assert:
    that:
      - domain_admin_user is defined
      - domain_admin_pass is defined

- name: Join Host to Domain
  win_domain_membership:
    dns_domain_name: '{{ domain_name }}'
    domain_admin_user: '{{ domain_admin_user }}@{{ domain_name }}'
    domain_admin_password: '{{ domain_admin_pass }}'
    state: domain
  register: domain_join_result

- name: Reboot Host After Joining Domain
  win_reboot:
  when: domain_join_result.reboot_required

- name: Test Domain User Login
  win_whoami:
  become: yes
  become_method: runas
  vars:
    ansible_become_user: '{{ domain_user_user }}@{{ domain_name }}'
    ansible_become_password: '{{ domain_user_pass }}'
```

Then we create a role that sets the hostname and IP configuration of each workstation. This feels like a rather manual and laborious way of assigning a static IP and setting a hostname, but I couldn't figure out a better way. Further down the line, we can use these files to configure each host with different tools or whatever else. In roles/workstation1/tasks/main.yml:

```yaml
---
- name: Hostname -> workstation1
  win_hostname:
    name: workstation1
  register: res

- name: Reboot
  win_reboot:
  when: res.reboot_required

- name: Set HostOnly IP Address
  win_shell: "If (-not(get-netipaddress | where {$_.IPAddress -eq '192.168.56.110'})) {$adapter = (get-netadapter | where {$_.MacAddress -eq '00-50-56-A2-B2-C3'}).Name; New-NetIPAddress -InterfaceAlias $adapter -AddressFamily IPv4 -IPAddress 192.168.56.110 -PrefixLength 24 -DefaultGateway 192.168.56.1 } Else { Write-Host 'IP Address Already Created.' }"

- name: Set HostOnly DNS Address
  win_shell: "$adapter = (get-netadapter | where {$_.MacAddress -eq '00-50-56-A2-B2-C3'}).Name; Set-DnsClientServerAddress -InterfaceAlias $adapter -ServerAddresses 192.168.56.100,8.8.8.8"
```

And for workstation2, in roles/workstation2/tasks/main.yml:

```yaml
---
- name: Hostname -> workstation2
  win_hostname:
    name: workstation2
  register: res

- name: Reboot
  win_reboot:
  when: res.reboot_required

- name: Set HostOnly IP Address
  win_shell: "If (-not(get-netipaddress | where {$_.IPAddress -eq '192.168.56.111'})) {$adapter = (get-netadapter | where {$_.MacAddress -eq '00-50-56-A2-B2-C4'}).Name; New-NetIPAddress -InterfaceAlias $adapter -AddressFamily IPv4 -IPAddress 192.168.56.111 -PrefixLength 24 -DefaultGateway 192.168.56.1 } Else { Write-Host 'IP Address Already Created.' }"

- name: Set HostOnly DNS Address
  win_shell: "$adapter = (get-netadapter | where {$_.MacAddress -eq '00-50-56-A2-B2-C4'}).Name; Set-DnsClientServerAddress -InterfaceAlias $adapter -ServerAddresses 192.168.56.100,8.8.8.8"
```

The static IPs are assigned based on each adapter's MAC address, which we fixed in Terraform. It seems like a convoluted way to do it, but it works.
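One thing that trips people up here: Terraform takes MACs as lowercase and colon-separated (00:50:56:a1:b2:c1), while Get-NetAdapter reports them uppercase and dash-separated (00-50-56-A1-B2-C1), which is why the roles use the second form. This Python sketch converts between the two and checks the HostOnly plan for duplicates; the HOSTONLY table is copied from main.tf and the roles above, and the helper names are hypothetical.

```python
from __future__ import annotations

import string


def terraform_to_windows_mac(mac: str) -> str:
    """Convert '00:50:56:a1:b2:c1' (Terraform) to '00-50-56-A1-B2-C1' (Get-NetAdapter)."""
    octets = mac.split(":")
    if len(octets) != 6 or not all(
        len(o) == 2 and all(c in string.hexdigits for c in o) for o in octets
    ):
        raise ValueError(f"not a colon-separated MAC address: {mac!r}")
    return "-".join(o.upper() for o in octets)


# HostOnly NIC MAC (from main.tf) and static IP (from the roles above) per host.
HOSTONLY = {
    "dc1": ("00:50:56:a1:b2:c1", "192.168.56.100"),
    "workstation1": ("00:50:56:a2:b2:c3", "192.168.56.110"),
    "workstation2": ("00:50:56:a2:b2:c4", "192.168.56.111"),
}


def check_hostonly_plan(plan: dict[str, tuple[str, str]] = HOSTONLY) -> None:
    """Raise ValueError if two hosts share a MAC or an IP (an easy copy-paste mistake)."""
    macs = [mac.lower() for mac, _ in plan.values()]
    ips = [ip for _, ip in plan.values()]
    if len(set(macs)) != len(macs) or len(set(ips)) != len(ips):
        raise ValueError("duplicate MAC or IP in HostOnly plan")
```

When adding a third workstation, running `check_hostonly_plan()` after extending the table catches a reused MAC before Windows silently configures the wrong adapter.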

Finally, we need to create a master playbook to tie everything together. This will be the file we execute with Ansible and will run all the various tasks we've assigned to each role.

In our top directory, let's create playbook.yml:

```yaml
---
- hosts: dc1
  strategy: free
  gather_facts: False
  roles:
    - dc1
  tags: dc1

- hosts: workstation1
  strategy: free
  gather_facts: False
  roles:
    - workstation1
    - common
  tags: workstation1

- hosts: workstation2
  strategy: free
  gather_facts: False
  roles:
    - workstation2
    - common
  tags: workstation2
```

Then let's fire it off with:

```shell
ansible-playbook -v playbook.yml -i inventory.yml
```

Now kick back and wait until your domain is configured for you. If everything goes to plan, take a snapshot and play away!

If anything ever breaks in the lab, you can delete the VMs and start again from the Terraform stage, or if you snapshotted them just roll it back.
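Starting again from the Terraform stage is just a handful of commands, so it can be wrapped up too. A minimal Python sketch, assuming Terraform files live in terraform_files/ and the Ansible files in the repo root (hypothetical layout). It defaults to a dry run that only lists the steps, since `terraform destroy -auto-approve` deletes the VMs (and their snapshots) without asking, and it pauses before Ansible because rebuilt VMs may come up with new DHCP addresses.

```python
from __future__ import annotations

import subprocess

# Hypothetical layout: Terraform files in terraform_files/, Ansible files in the repo root.
REBUILD_STEPS: list[tuple[str, list[str]]] = [
    ("terraform_files", ["terraform", "destroy", "-auto-approve"]),
    ("terraform_files", ["terraform", "apply", "-auto-approve"]),
    (".", ["ansible-playbook", "-v", "playbook.yml", "-i", "inventory.yml"]),
]


def rebuild(steps: list[tuple[str, list[str]]] = REBUILD_STEPS,
            dry_run: bool = True) -> list[str]:
    """Run (or, by default, just list) the rebuild steps in order, stopping at the first failure."""
    executed = []
    for cwd, cmd in steps:
        executed.append(f"(cd {cwd} && {' '.join(cmd)})")
        if dry_run:
            continue
        if cmd[0] == "ansible-playbook":
            # The rebuilt VMs may have new DHCP addresses; update inventory.yml first.
            input("Update inventory.yml with the new IPs, then press Enter to run Ansible...")
        subprocess.run(cmd, cwd=cwd, check=True)  # raises on a non-zero exit code
    return executed
```

Calling `rebuild()` prints nothing and runs nothing; print its return value to review the plan, then call `rebuild(dry_run=False)` when you're happy.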

And that's it. In a future post, I'll go over adding some detection software that feeds data back to a SIEM solution such as Splunk or ELK. In the meantime, I recommend checking out:

https://hausec.com/2021/03/04/creating-a-red-blue-team-home-lab/

Happy hacking!